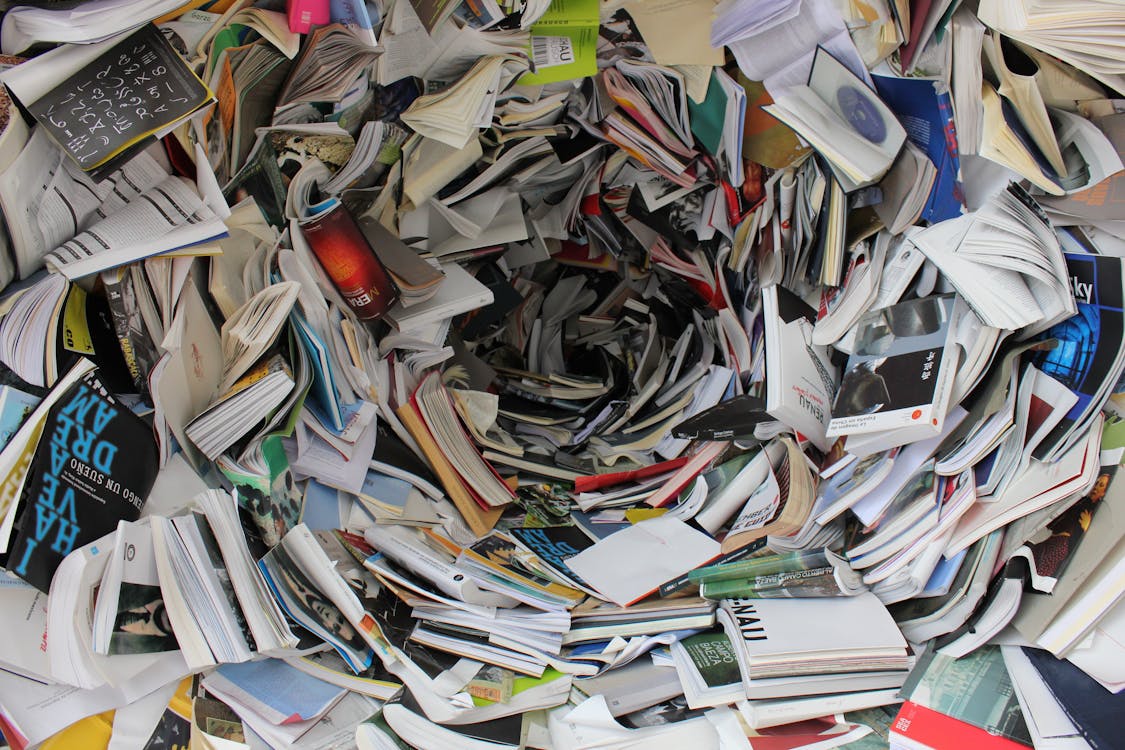

With millions of scientific papers published every year, researchers are drowning in data. Artificial intelligence (AI) tools like Elicit and Consensus promise to cut through the noise, speeding up literature reviews and transforming how scientists access knowledge. But can they truly replace human intuition and critical thinking?

AI has already revolutionized many aspects of scientific research, from complex data analysis to disease detection. Now, AI-powered search engines are addressing one of the most tedious tasks in research: wading through thousands of papers to find relevant insights.

In an article published by Nature yesterday, October 10, experts discussed how AI search engines like Consensus and Elicit offer new ways to quickly find and summarize research findings.

Eric Olson, CEO of Consensus, stated that while AI tools cannot replace a deep dive into scientific studies, they provide a fast, high-level overview that saves researchers valuable time. This time-saving function is critical in precision-driven fields.

Even the National Aeronautics and Space Administration (NASA) has adopted AI search engines to help its scientists sift through massive data sets across its many research centers. The AI-powered Science Discovery Engine (SDE) allows NASA researchers to search its vast open science data repository from a single, centralized place. The tool has streamlined access to the agency's astrophysics and earth science data and significantly cut search times.

AI-powered tools also have a role in making research more inclusive. Language models can translate research papers and summarize findings in multiple languages, giving researchers who have historically had limited access a way into diverse scientific literature.

Yet behind these advantages are limitations that, for now, may be beyond the control of AI developers. AI search engines have been known to “hallucinate,” producing false information or misrepresenting research.

Alec Thomas, a sports scientist at the University of Lausanne, expressed concerns about the accuracy of AI-generated outputs, saying, “We wouldn’t trust a human that is known to hallucinate, so why would we trust an AI?”

His caution underscores the need for human validation in AI-assisted research. Developers are still working to address these issues by implementing safeguards, but users are advised to treat AI-generated summaries as a supplement to human judgment, not a substitute for it.

On the same note, Anna Mills, a professor mentioned in Nature, said that AI has been helpful in her profession, but she encouraged users to think critically about the information it provides before relying on it.

“Part of being a good scientist is being skeptical about everything, including your own methods,” said Conner Lambden, founder of BiologGPT, one of many subject-specific AI tools, which caters specifically to biology queries.

AI-powered science search engines are undeniably making strides in reducing the time and cognitive load required for scientific research. However, their development is a work in progress, and researchers must remain vigilant about how they use these tools. Ultimately, AI is set to complement, not replace, human expertise.