Artificial Intelligence (AI) has transformed modern technology, driving industry advancements. However, it’s not without flaws. One significant challenge is AI hallucinations, where systems produce incorrect or nonsensical outputs. These errors reveal critical gaps in AI development, raising concerns about reliability and trust.

Understanding hallucinations is essential as AI applications grow in healthcare, finance, and education. In this article, we’ll explore what AI hallucinations are, their causes, and their real-world implications in today’s rapidly evolving tech landscape.

What is an AI Hallucination?

An AI hallucination occurs when an AI system generates information that is false, irrelevant, or fabricated. These outputs may seem logical but are not supported by the training data or by reality. The term applies to systems such as AI chatbots and image generators, both of which are prone to these errors.

For example, a chatbot might confidently provide incorrect facts. An image generator could create visuals of objects that don’t exist. Understanding what AI hallucinations are is crucial, as it highlights vulnerabilities in AI systems and raises concerns about their reliability in critical fields. Chatbots, and the prompt-handling side of image generators, rely on natural language processing (NLP) to interpret language. When a model fills gaps in that interpretation with fabricated information, the result is a hallucination.

Historical Context of AI Hallucinations

The concept of AI hallucinations began to surface during the 2010s as AI systems became more advanced. Early instances were noticed in image recognition tools that misidentified patterns or objects. Generative models, like chatbots and large language models (LLMs), later displayed similar issues by producing fabricated outputs.

Researchers linked these problems to biases in training data and limitations in algorithms. The term “AI hallucination” was coined to describe these errors and their unpredictable nature. Over time, addressing hallucinations became a major focus in AI research. This effort aimed to improve accuracy and build trust in AI-driven applications.

How Do AI Hallucinations Occur?

AI hallucinations occur when AI models generate false or nonsensical outputs. These errors often stem from training data issues or model design flaws. AI systems rely on training data to find patterns and make predictions. When the data is incomplete, biased, or flawed, the model learns incorrect patterns, which leads to hallucinations. For instance, a medical AI trained on biased datasets might mislabel healthy tissue as cancerous.

Another common cause is poor grounding: when a model’s outputs are not tied to real-world context or verifiable sources, hallucinations become more likely. Limitations in NLP algorithms also contribute to errors. Both issues result in outputs that seem logical but lack factual accuracy.

AI systems often struggle to align predictions with real-world facts, leading to outputs that seem plausible but are ultimately false. Examples include fabricated news summaries or links to non-existent web pages. These issues are especially common in AI applications such as large language models (LLMs), which rely heavily on probabilistic predictions to generate content. This reliance makes them particularly prone to errors, underscoring the need for caution in their use across various fields.
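To see why probabilistic prediction can produce fluent errors, consider a toy sketch. It is not how any particular LLM is implemented: the candidate words and their scores are invented, and `softmax` and `sample_next_token` are illustrative names. The point is only that when a wrong continuation has a meaningful probability, sampling will sometimes pick it.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Invented scores a model might assign to continuations of
# "The capital of Australia is". Only "Canberra" is correct.
candidates = ["Canberra", "Sydney", "Melbourne"]
scores = [2.0, 1.5, 0.5]

def sample_next_token(rng, temperature=1.0):
    """Draw one candidate at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample_next_token(rng) for _ in range(1000)]
wrong = sum(token != "Canberra" for token in draws)
print(f"{wrong / len(draws):.0%} of samples were fluent but wrong")
```

Every sampled word reads naturally in the sentence, which is why this kind of error is hard to spot without checking the facts.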

Improving data quality is a key step to reducing hallucinations. Grounding models in real-world contexts also helps address this issue. Additionally, refining algorithms helps make AI systems more reliable. Together, these measures improve the accuracy of AI across different applications.

Types of AI Hallucinations

AI hallucinations can be classified into three main types:

- Semantic Hallucinations: These happen when an AI generates text that is well-formed but factually incorrect or irrelevant. For example, a chatbot might provide a confident but false response to a question.

- Logical Inconsistencies: In this type, AI outputs contradict basic reasoning or logic. An example could be an AI suggesting conflicting steps in a problem-solving task.

- Visual Distortions: Common in generative models, these occur when AI produces unrealistic or nonsensical images. For instance, an AI might generate a person with extra limbs or distorted features.

Common Examples of AI Hallucinations

AI hallucinations are a problem in many industries. They show the challenge of ensuring accurate and reliable outputs. Below are three key examples:

Misinformation in Generative Text:

Generative AI systems like ChatGPT or Google Bard often produce false but convincing information. For example, Google Bard once claimed the James Webb Space Telescope captured the first images of an exoplanet. This statement was false: the first image of an exoplanet was taken in 2004, years before the telescope launched. Such errors can spread misinformation, reduce user trust, and create reputational risks for businesses that depend on AI.

Misinterpretation in Image Recognition:

In healthcare, AI models sometimes misinterpret medical images due to hallucinated patterns. For instance, an AI system might label healthy tissue as cancerous if its training data lacks variety. These mistakes can lead to unnecessary treatments or missed diagnoses, posing serious risks for patients.

Errors in Recommendation Systems:

AI systems in e-commerce or streaming platforms sometimes provide irrelevant or nonsensical suggestions. For example, a shopping platform might recommend products that have nothing to do with a user’s browsing or purchase history. Such errors frustrate users and harm business revenue.

AI Hallucinations in Generative AI

AI hallucinations are common in generative systems like large language models (LLMs) and text-to-image tools. Below are specific cases:

- False Information in Text: ChatGPT and similar models often generate incorrect responses. For example, they might confidently provide fabricated references or historical inaccuracies. These outputs mislead users and reduce trust in the system.

- Distorted Visual Outputs: Text-to-image tools like DALL-E sometimes create nonsensical visuals. Examples include human figures with extra limbs or distorted faces. They may also produce scenes that defy natural physics, such as shadows falling in impossible directions.

- Invented Contexts: LLMs frequently misunderstand prompts, leading to hallucinations like irrelevant or made-up facts. A user asking about real-world events might receive responses based on fictional scenarios.

- Creative Misfires: Generative AI often adds creative elements that don’t align with reality. For example, it might create imaginary locations or invent non-existent concepts when asked scientific questions.

Addressing these issues is essential to improving the accuracy of generative AI systems. This ensures they are more reliable and usable in practical applications.

Why AI Hallucinations Matter

Hallucinations create risks that affect trust in AI, business operations, and society. Understanding these impacts is critical as AI becomes more integrated into everyday life.

- Trust in AI: When AI systems, like chatbots, provide false or nonsensical outputs, users lose trust. For example, a chatbot’s bad medical advice can create skepticism and discourage adoption.

- Business Operations: AI hallucinations disrupt business efficiency and decision-making. In healthcare, hallucinations in medical imaging systems might lead to misdiagnoses. This can result in unnecessary treatments, legal challenges, and reputational harm. In finance, hallucinated predictions can cause flawed business strategies and financial losses.

- Social Implications: On a societal level, AI hallucinations contribute to misinformation. Generative AI systems that fabricate news or create false details can mislead the public. This increases confusion and mistrust in institutions, fueling societal challenges like polarization.

Impact on Industries

AI hallucinations affect several critical industries, creating challenges in maintaining reliability and trust. Below is an overview of their impact on healthcare, finance, and education.

- Healthcare: Hallucinations in AI-powered tools can lead to serious consequences in healthcare. For example, medical imaging systems might misdiagnose conditions or identify patterns that do not exist. Such errors can result in unnecessary treatments or missed diagnoses. Patients’ trust in AI healthcare solutions drops when those solutions fail to give accurate results.

- Finance: In finance, hallucinations can disrupt decision-making and cause financial losses. AI models used for market analysis or fraud detection may generate false insights. For instance, an AI tool might flag valid transactions as fraud. It could also make wrong stock predictions. These errors can harm businesses and lead to costly decisions.

- Education: AI systems sometimes deliver fabricated or misleading information to students. For example, an AI tutor might incorrectly answer complex queries or misrepresent history. These hallucinations hinder the learning process and reduce trust in educational technology.

Addressing these issues is vital to ensure AI remains a beneficial tool in these industries. Developers must improve data quality and refine algorithms. They also need to provide oversight to minimize errors and build trust.

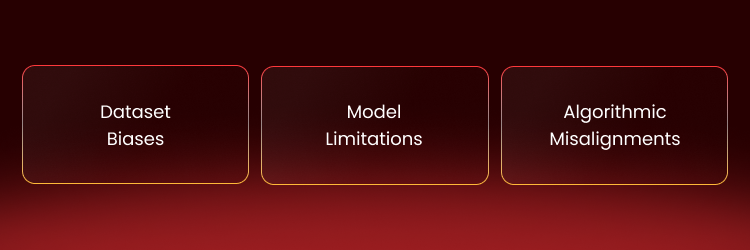

Causes of AI Hallucinations

AI hallucinations result from flaws in data, model design, and algorithms. Below are the leading causes:

- Dataset Biases: AI systems depend on training data to make predictions. The model learns flawed patterns when the data is biased, incomplete, or inaccurate. For example, outdated or region-specific data can lead to hallucinated responses. In image recognition, biased datasets can cause AI to misidentify objects or create nonsense images.

- Model Limitations: LLMs generate outputs based on probabilities rather than real-world understanding. This often leads to hallucinations, such as fabricated text or distorted visuals. Text-to-image models, for instance, might produce images that lack realistic details.

- Algorithmic Misalignments: Algorithms are designed to optimize specific objectives. If these goals conflict, AI may prioritize fluency or creativity over accuracy. For instance, a content generator might fabricate details to make outputs more engaging.

Addressing these causes requires better training datasets and improved algorithms. Models must also align closely with real-world objectives. Another key step is to verify outputs. This will reduce hallucinations and improve AI reliability.
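The algorithmic misalignment described above can be illustrated with a toy scoring function. This is a sketch under stated assumptions, not a real training objective: the `Candidate` class, the `pick` function, and all fluency and accuracy scores are hypothetical and invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    text: str
    fluency: float   # hypothetical score in [0, 1]
    accuracy: float  # hypothetical score in [0, 1]

def pick(candidates, accuracy_weight):
    """Return the candidate with the best weighted score.

    accuracy_weight is the share of the objective given to accuracy;
    the remainder goes to fluency.
    """
    fluency_weight = 1.0 - accuracy_weight
    return max(
        candidates,
        key=lambda c: fluency_weight * c.fluency + accuracy_weight * c.accuracy,
    )

options = [
    Candidate("Vivid answer with a fabricated detail", fluency=0.95, accuracy=0.20),
    Candidate("Plain answer that sticks to the facts", fluency=0.70, accuracy=0.95),
]

# An objective dominated by fluency prefers the fabricated answer.
print(pick(options, accuracy_weight=0.1).text)
# Shifting weight toward accuracy flips the choice.
print(pick(options, accuracy_weight=0.7).text)
```

The same two candidates produce different winners depending only on how the objective is weighted, which is the sense in which a system can "prioritize fluency or creativity over accuracy."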

Role of Data in AI Hallucinations

Poor-quality data is a key cause of AI hallucinations. When training datasets are biased, incomplete, or inaccurate, AI models learn flawed patterns. These flaws often lead to false or nonsensical outputs. For example, a model trained on region-specific data may struggle with unfamiliar contexts, which can result in hallucinated responses.

In image recognition, unbalanced datasets often cause misidentifications or unrealistic visuals. Data quality also affects how well AI systems handle new tasks or scenarios. Using diverse, accurate, and carefully curated datasets is essential. This helps reduce hallucinations and improves the reliability of AI systems.
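One simple way to check for the unbalanced datasets mentioned above is to count how often each label appears. The sketch below is a minimal illustration: the labels are invented, `label_balance` is a hypothetical helper, and the `max_ratio` cut-off of 5 is an assumption rather than an established standard.

```python
from collections import Counter

def label_balance(labels, max_ratio=5.0):
    """Return per-label counts and whether the set looks imbalanced.

    max_ratio is an assumed cut-off: the set is flagged when the most
    common label appears more than max_ratio times as often as the rarest.
    """
    counts = Counter(labels)
    most = max(counts.values())
    least = min(counts.values())
    return counts, (most / least) > max_ratio

# Invented labels for a hypothetical medical-imaging training set.
labels = ["healthy"] * 950 + ["cancerous"] * 50
counts, imbalanced = label_balance(labels)
print(dict(counts), "imbalanced:", imbalanced)
```

A check like this only sees the labels that are present. A category that is missing from the data entirely will not be flagged, so it complements careful curation rather than replacing it.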

Can AI Hallucinations Be Prevented?

Preventing AI hallucinations is difficult, but several strategies can help reduce their occurrence. High-quality datasets are essential. Diverse and unbiased data ensures AI models learn accurate patterns. Regular model updates using fresh data also help reduce outdated or fabricated outputs.

Refining algorithms to focus on accuracy rather than fluency can further lower the risk of hallucinations. Human oversight is another crucial step. In fields like healthcare and finance, reviewing AI-generated outputs helps catch errors early.
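Human oversight is often organized as a review queue. The sketch below assumes the AI system reports a confidence score with each output, which not every system does. `ModelOutput`, `route`, and the 0.9 threshold are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class ModelOutput:
    text: str
    confidence: float  # assumed to be reported by the model, in [0, 1]

REVIEW_THRESHOLD = 0.9  # assumed cut-off; tune per application

def route(output, threshold=REVIEW_THRESHOLD):
    """Send low-confidence outputs to a person instead of releasing them."""
    if output.confidence >= threshold:
        return "auto_approve"
    return "human_review"

print(route(ModelOutput("Scan shows no abnormality.", confidence=0.97)))
print(route(ModelOutput("Scan shows a possible lesion.", confidence=0.62)))
```

Because hallucinated outputs are often delivered with high confidence, a threshold alone is not a safeguard. In high-stakes settings the threshold can be set above 1.0 so that every output goes to a reviewer.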

However, challenges remain. Large language models rely on probabilities, which makes occasional inaccuracies inevitable. Eliminating biases from training data is also a complex task. Some errors may still occur even with the best practices in place.

To reduce these risks, developers must improve data handling, refine algorithms, and ensure human oversight. Complete prevention may not be possible. However, these steps can greatly reduce hallucinations and improve AI reliability in many applications.

Methods to Reduce Hallucinations

- Improved Dataset Quality: Using diverse, accurate, and unbiased data reduces errors. High-quality datasets help AI models learn reliable patterns and avoid the hallucinations that stem from flawed training inputs.

- Rigorous Validation Processes: Regular testing of AI systems against real-world scenarios identifies issues. Validation ensures outputs are logical, factual, and consistent with user expectations.

- Enhanced Feedback Loops: Feedback from users or experts allows AI systems to adapt and improve over time. Continuous learning corrects errors and minimizes repeated hallucinations.
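The validation process in the list above can be sketched as a small test harness that compares a model’s answers with a trusted reference set. Everything here is illustrative: `stub_model` stands in for a real AI system, the questions are invented, and substring matching is a deliberately crude way to judge correctness.

```python
def normalize(text):
    """Lowercase and collapse whitespace so comparisons are less brittle."""
    return " ".join(text.lower().split())

def evaluate(model, reference):
    """Return the error rate and the list of (question, answer) failures."""
    failures = []
    for question, expected in reference.items():
        answer = model(question)
        if normalize(expected) not in normalize(answer):
            failures.append((question, answer))
    return len(failures) / len(reference), failures

def stub_model(question):
    """Hypothetical stand-in for a real model; one canned answer is wrong."""
    canned = {
        "What is the capital of Australia?": "The capital of Australia is Sydney.",
        "How many days are in a leap year?": "A leap year has 366 days.",
    }
    return canned.get(question, "I am not sure.")

reference = {
    "What is the capital of Australia?": "Canberra",
    "How many days are in a leap year?": "366",
}

error_rate, failures = evaluate(stub_model, reference)
print(f"error rate: {error_rate:.0%}")
for question, answer in failures:
    print("FAILED:", question, "->", answer)
```

Running a harness like this after each model update gives a simple way to see whether errors are going up or down, and the failures list is a natural input for the feedback loop.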

Future of AI and Hallucinations

Advancements in AI technology offer solutions to reduce AI hallucinations. Using larger and more diverse datasets can help models learn accurate patterns. Grounding techniques validate outputs against real-world facts, lowering the risk of errors. These methods target the core problem by reducing nonsensical or false responses.

Algorithms with improved context understanding are another key development. They help outputs align with what the user actually asked, reducing the likelihood of hallucinations. Tools for transparency, like explainability features, make it easier for users to detect issues. Together, these steps focus on improving system accuracy.

New challenges will arise as AI becomes more advanced. Biases in training data and the complexity of large systems remain concerns. Developers must address these issues while refining their models. Done well, this makes AI systems more reliable and helps avoid the risks shown in the examples earlier in this article.
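Grounding can be pictured as checking each claim against a trusted source before it reaches the user. The sketch below is a simplified illustration, not a production technique: `TRUSTED_FACTS` is a hypothetical hand-written lookup table, and `check_claim` assumes the claim has already been broken into a subject, an attribute, and a value.

```python
# Hypothetical trusted reference data. In practice this might be a curated
# database or a searchable document index.
TRUSTED_FACTS = {
    ("Australia", "capital"): "Canberra",
    ("leap year", "days"): "366",
}

def check_claim(subject, attribute, claimed_value):
    """Classify a claim as supported, contradicted, or unverifiable."""
    known = TRUSTED_FACTS.get((subject, attribute))
    if known is None:
        return "unverifiable"
    return "supported" if known == claimed_value else "contradicted"

print(check_claim("Australia", "capital", "Canberra"))
print(check_claim("Australia", "capital", "Sydney"))
print(check_claim("Australia", "population", "26 million"))
```

The third result matters as much as the first two. A grounded system should be able to say that it cannot verify a claim instead of presenting it as fact.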

Ethical Considerations

AI hallucinations raise critical ethical concerns, especially in high-stakes fields. In healthcare, hallucinations can lead to misdiagnoses or inappropriate treatments. These errors pose serious risks to patient safety. In finance, false outputs may result in flawed decisions and financial losses. Misinformation from generative AI can increase distrust and divide society.

Developers must focus on transparency and accountability to address these issues. Ethical AI practices, such as using diverse and unbiased datasets, are essential. Educating users about what AI hallucinations are promotes responsible use. Understanding the limitations of AI systems also helps users make informed decisions. Balancing innovation with ethics can reduce AI hallucinations and build trust in AI systems.

How to Spot AI Hallucinations

Identifying AI hallucinations is crucial for ensuring accuracy and trust in AI systems. Users should always cross-verify AI-generated content against reliable sources. This helps find errors or falsehoods, especially in AI text tools. Monitoring patterns in outputs is another effective strategy. Hallucinations often show logical contradictions, irrelevant details, or overly confident yet wrong responses.
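One way to monitor patterns in outputs is to ask the same question several times and measure how often the answers agree. The sketch below assumes the answers have already been collected. The sample answers are invented, `agreement` is a hypothetical helper, and the 0.8 threshold is an assumption.

```python
from collections import Counter

def agreement(answers):
    """Return the most common answer and the share of samples that gave it."""
    normalized = [" ".join(a.lower().split()) for a in answers]
    top_answer, top_count = Counter(normalized).most_common(1)[0]
    return top_answer, top_count / len(normalized)

# Invented answers from five runs of the same prompt.
samples = ["Canberra", "canberra", "Sydney", "Canberra ", "Melbourne"]
top, share = agreement(samples)

if share < 0.8:  # assumed threshold for "the model is not consistent"
    print(f"Low agreement ({share:.0%}) on '{top}': verify before trusting")
else:
    print(f"Consistent answer: {top}")
```

Low agreement is a warning sign, but high agreement is not proof of accuracy. A model can repeat the same wrong answer every time, so this check works best alongside cross-verification against reliable sources.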

Developers should focus on analyzing training data quality. Low-quality or biased datasets often lead to hallucinations. Using diverse and accurate data minimizes these risks. Incorporating human feedback into AI systems is equally important. Regular input from users or experts helps fine-tune models and reduces recurring errors.

Validation tools can also play a key role in spotting hallucinations. These tools test the factual accuracy of AI-generated content and ensure alignment with real-world data. By combining these strategies, users and developers can effectively address hallucinations, enhancing the reliability of generative artificial intelligence systems.

FAQs About AI Hallucinations

What are the dangers of AI hallucinations?

AI hallucinations can lead to misinformation and harm decision-making. In healthcare, they may cause misdiagnoses. In finance, flawed outputs from a generative AI model can result in financial losses. Reducing hallucinations is essential to maintain trust in AI tools.

Can all AI models hallucinate?

Most can. Generative AI models in particular are prone to hallucination. This often happens due to low-quality training data or algorithmic flaws. Better data and refined algorithms can lower these errors.

How do I stop AI from hallucinating?

You cannot stop hallucinations entirely, but you can reduce them by improving data quality and refining algorithms. Regular updates and validation tools also help ensure more accurate outputs. Human feedback is vital for reducing recurring errors.

How do hallucinations affect decision-making?

Hallucinations mislead users with false or irrelevant information. This can lead to flawed decisions, especially in business, education, or healthcare.

Are hallucinations unique to specific AI systems?

No, hallucinations occur in various systems, including generative AI models and chatbots. Factors like biased data and algorithmic limitations make some systems more prone to errors.

How are researchers addressing AI hallucinations?

Researchers focus on improving datasets and refining algorithms. Feedback loops and validation tools help reduce hallucinations, enhancing the reliability of AI tools across industries.

AI Hallucination: Conclusion

AI hallucinations reveal critical challenges in modern AI systems. These errors, caused by dataset biases, model limitations, and algorithmic flaws, undermine trust in AI. They create risks in industries like healthcare, finance, and education. Understanding what AI hallucinations are and their root causes is essential for improving reliability.

Preventing hallucinations requires high-quality data, refined algorithms, and regular validation processes. Human feedback helps reduce recurring errors and improves system performance. Transparency and ethical oversight are also key to maintaining trust in AI tools.

While hallucinations cannot be fully eliminated, their occurrence can be minimized. Addressing the flaws highlighted by the examples in this article helps developers create more accurate systems. This approach helps keep AI technologies safe, reliable, and aligned with real-world needs.