A new study led by Dogan Gursoy, a professor of hospitality management at Washington State University’s Carson College of Business, finds that consumers are less willing to buy products labeled as artificial intelligence (AI)-powered than the same products marketed as “high tech.” The findings were published in the Journal of Hospitality Marketing & Management.

The researchers examined reactions from more than 1,000 adults to products ranging from televisions and vacuums to consumer and health services. Participants were shown the same item with different label descriptors: one “high tech” and the other “AI-powered.”

The results were consistent across the board. “In every single case, the intention to buy or use the product or service was significantly lower whenever we mentioned AI in the product description,” Gursoy stated.

Consumer Trust and Perception

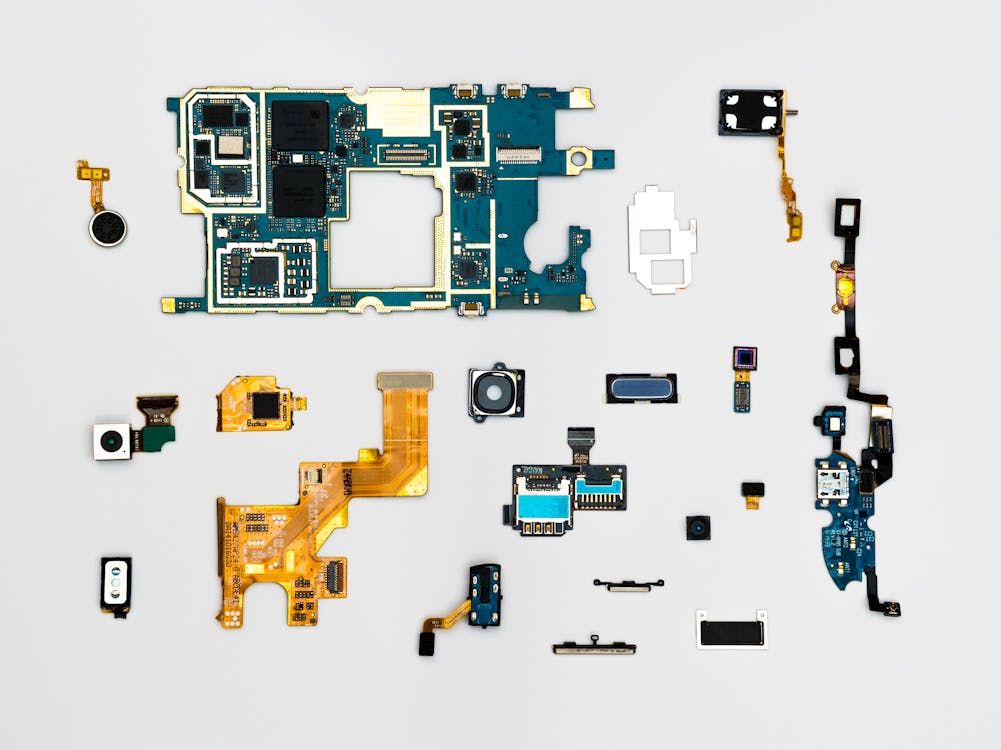

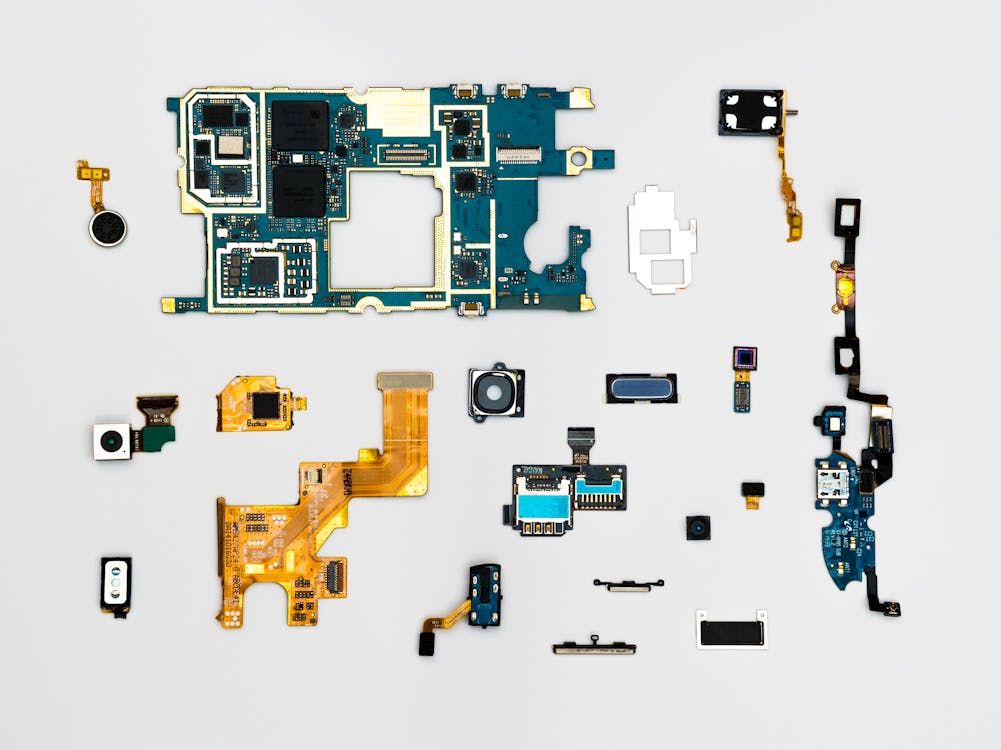

The study explores two kinds of trust affecting how people perceive AI products: cognitive trust and emotional trust. Cognitive trust relates to the expectation that machines should be free from human error. When AI systems fail, this trust is quickly eroded.

Emotional trust, on the other hand, deals with consumers’ fear and uncertainty about AI’s potential impact on their daily lives. Gursoy said that limited understanding of how AI works leads consumers to approach these products with caution.

The Fear of AI Takeover

Aversion and discomfort toward AI were higher for “high-risk” offerings: high-stakes products and services that involve critical safety considerations and are usually handled by professionals. This reaction touches on the fear of being replaced by robots, a recurring theme in science fiction and fantasy media.

“It threatens my identity, threatens the human identity,” Gursoy said. “Nothing’s supposed to be more intelligent than us.”

This fear is not unfounded. In Japan, for instance, the creative industry is grappling with the implications of AI-generated art. Catherine Thorbecke, writing for Bloomberg Opinion, noted that many Japanese artists say their work is being used to train AI tools without their permission.

“Many feel that their work is being taken to create the very tools that then threaten their livelihoods,” Thorbecke wrote.

Moreover, consumer concerns over privacy have exacerbated fears around AI. According to Cisco’s 2023 Consumer Privacy Survey, many consumers feel their trust in AI has already been undermined by organizations using the technology irresponsibly. Instances like Amazon’s implementation of a palm-scanning payment system at Whole Foods have only heightened cybersecurity concerns.

Balancing Innovation and Safety

As AI technologies continue to evolve, balancing innovation with safety has become crucial. Trust and safety professionals are working closely with the AI community to address these complexities.

Agustina Callegari, Project Lead at the Global Coalition for Digital Safety, stressed that dialogue and safeguards must go hand in hand. “Through open discussions and implementing robust safety measures, we can ensure AI technologies are harnessed responsibly for the benefit of all,” Callegari stated.

To encourage consumers to buy AI-enhanced products, brands must first alleviate concerns about the technology. This involves clearly communicating what the AI features do for the customer and being more transparent about data practices.

As Gursoy pointed out, “Rather than simply putting ‘AI-powered’ or ‘run by AI,’ telling people how this can help them will ease the consumer’s fears.”